Run processes in the background

Suppose you have a long-running script (we'll use Python here as an example):

```python
import time
time.sleep(2 * 60 * 60)  # stand-in for work that takes a couple of hours
```

and you have launched it in an active terminal session like this: `python script.py` (or `poetry run python script.py`).

Suddenly you realize that this is going to take a couple of hours to finish, and you need to either exit the current shell or log out from the remote instance where your SSH connection is established. Nobody wants to lose the time and work already spent, and in most cases you cannot simply restart the process the proper way (see the options below) because:

- the script is most likely not idempotent (in other words, it cannot be run multiple times producing the same results without causing additional side effects)

- the script is not designed to be resumed, so it cannot pick up from the point where it was interrupted

- running it repeatedly will break the consistency of your data

- let's face it: for simple scripts you don't usually think about all the items above or handle every possible corner case

Solution

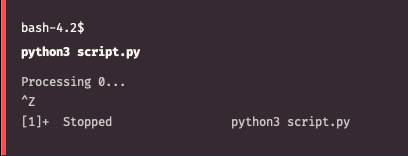

- Press Ctrl-Z in the active window. This sends the SIGTSTP signal to your process, causing it to suspend.

- Type

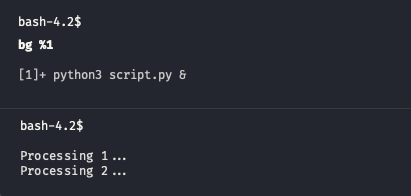

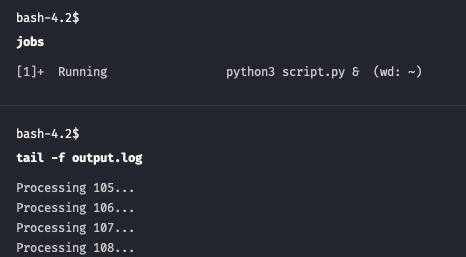

jobsin the same shell. This utility will list all jobs in current session.jobs -lwill also display process ID or any other information available

- Now use another built-in, `bg`, to resume the job in the background: type `bg %1`. Note how we reference a job by prefixing its number with the `%` sign.

This would be enough if your process does not print anything to the terminal. Otherwise it can clutter the screen or, if messages are produced fast enough, make it impossible to work properly in the session. You can type `stty tostop` so that a background job is stopped automatically as soon as it tries to write to the terminal (through its standard output or standard error).
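The Ctrl-Z, `jobs`, `bg` sequence can also be replayed from a script, which is a harmless way to experiment before touching a real job. A minimal sketch, assuming bash on Linux; `sleep 60` is a hypothetical stand-in for `python script.py`, and `kill -TSTP %1` plays the role of pressing Ctrl-Z (the terminal sends SIGTSTP to the foreground job when you do):

```bash
# Sketch only: replay Ctrl-Z, jobs and bg without a keyboard.
# Assumption: bash on Linux; `sleep 60` stands in for `python script.py`.
set -m                 # enable job control (interactive shells have it on by default)

sleep 60 &             # start the stand-in job; it becomes job %1
pid=$!

kill -TSTP %1          # send SIGTSTP, the same signal Ctrl-Z sends, to job %1
sleep 1                # give bash a moment to register that the job stopped
jobs -l                # job %1 should be listed with its PID as "Stopped"

bg %1                  # resume job %1 in the background (bash sends it SIGCONT)
jobs -l                # job %1 should now be listed as "Running"
```

In an interactive terminal you would skip `set -m` and the `kill` line and simply press Ctrl-Z.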

In the meantime, we will use reredirect to redirect the output of an already running process on the fly.

```shell
$ git clone https://github.com/jerome-pouiller/reredirect.git
```

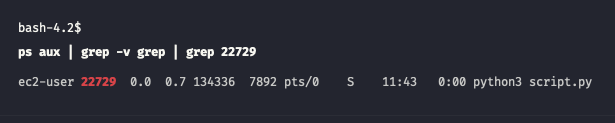

Take the process ID reported by `jobs -l` and run this command:

```shell
# reredirect -m [output-filename] [PID]
```
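Instead of copying the PID from `jobs -l` by hand, bash can print it directly with `jobs -p` (the PID of the job's process group leader, which for a single command is the process itself). A small sketch; `sleep 60` is a hypothetical stand-in for the resumed script, and the reredirect call is left commented out because it assumes reredirect is already built and installed:

```bash
# Sketch only: `sleep 60` stands in for the job resumed with `bg %1`.
sleep 60 &
pid=$(jobs -p %1)                  # print only the PID of job %1
echo "job %1 has PID $pid"
# reredirect -m output.log "$pid"  # hand the PID to reredirect (requires reredirect)
```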

You can still track progress and examine the output with `tail -f output.log`.

- The last step in this entangled procedure is to make sure we can drop the current session without killing the process. This is useful if you plan to close the SSH connection to the remote machine and reconnect later to check on progress. The shell's `disown` built-in is designed for exactly this purpose.

```shell
$ disown %1
```

Note that the job is referenced by its number, exactly as with `bg`. However, after detaching, the process no longer appears in the `jobs` output, because the current shell does not own it anymore. Its PID stays the same, and you can keep tracking progress with `tail -f output.log`.
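A small bash sketch of what `disown` changes, assuming `sleep 60` as a hypothetical stand-in for the real command. After `disown`, the job is gone from the shell's job table (so the shell will not resend SIGHUP to it when it exits on a hangup), yet the process keeps running under the same PID. `disown` does nothing about output, though, which is why redirecting it first matters:

```bash
# Sketch only: `sleep 60` stands in for the real long-running command.
sleep 60 &             # background job %1
pid=$!
jobs -l                # lists job %1 together with its PID

disown %1              # remove job %1 from this shell's job table
jobs -l                # prints nothing: the shell no longer tracks the job

if kill -0 "$pid"; then    # signal 0 only checks that the process exists
  echo "PID $pid is still running"
fi
```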

Meet nohup

If you plan to run a process this way from the very beginning, first make sure all of its output is written to a file (to avoid hacks like changing a process's output at runtime):

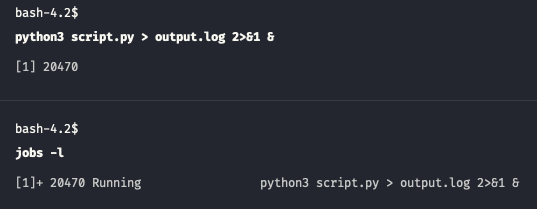

```shell
$ poetry run python script.py > output.log 2>&1
```

This command redirects stdout to `output.log` and then points stderr to wherever stdout currently goes, i.e. the same file.
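The order of the two redirections matters, because `2>&1` copies wherever stdout points at that moment. A small bash sketch; the `emit` function and the file names are hypothetical stand-ins for a script that writes to both streams:

```bash
# Hypothetical stand-in for a script that writes to both output streams.
emit() {
  echo "progress: step 1"            # standard output
  echo "warning: example message" >&2  # standard error
}

# stdout goes to output.log first, then stderr is pointed at the same file.
emit > output.log 2>&1

# Reversed order: stderr is pointed at the old stdout (the terminal, in an
# interactive session) first, then only stdout is moved to the file.
emit 2>&1 > stdout-only.log
```

With the reversed order, the warning would keep showing up in your terminal even though the job runs in the background.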

Then apply the Ctrl-Z and `bg` trick to move the process to the background. To simplify this, you can add an ampersand (`&`) to the end of the command so that it runs as a background process in the first place:

```shell
$ poetry run python script.py > output.log 2>&1 &
```

Note that you can always bring a background job back to the foreground with `fg`. As before, refer to the job by the percent sign and its number: `fg %1` brings job 1 to the foreground, and you can send it back to the background at any time (Ctrl-Z, then `bg %1`).

The next step is to call `disown` on the job (`disown %1`) so that we can safely close the current shell session without interrupting the process.

Finally, meet `nohup`, which lets us skip the `disown` step when we know in advance that we are launching a long-running background process: it runs the command with the hangup signal (SIGHUP) ignored, so closing the session does not terminate it. The final version of the invocation should look like this:

```shell
# nohup [your command goes here] > output.log 2>&1 &
```

This is the proper and safe way to run a process in the background: its output will not clutter the current session, and it will not be terminated unexpectedly when the session is closed.
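One way to convince yourself that `nohup` works is to send the background process the same SIGHUP a closing session would deliver. A bash sketch, assuming the `sh -c` loop is a hypothetical stand-in for `poetry run python script.py` and that a one-second head start is enough for `nohup` to set SIGHUP to "ignore":

```bash
# Start the stand-in job with nohup, output redirected, in the background.
nohup sh -c 'for i in 1 2 3; do echo "step $i"; sleep 1; done; echo "done" >&2' \
  > output.log 2>&1 &
pid=$!

sleep 1                # let nohup start and ignore SIGHUP before we test it
kill -HUP "$pid"       # simulate the hangup sent when the session closes
wait "$pid"            # demo only: a real job would simply keep running after logout
echo "exit status: $?" # 0, because the job ignored SIGHUP and finished normally
cat output.log
```

Because stdout is already redirected, `nohup` does not create its default `nohup.out` file; everything ends up in `output.log`.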

Use screen

There is a preferred way of running anything on a remote instance over SSH so that a dropped connection does not affect the work being done there: the terminal multiplexer `screen`. The same tool can also be used to run background processes. First, install `screen`:

```shell
# Depending on the system you run, e.g. on Debian/Ubuntu (use dnf, pacman, brew, ... elsewhere):
$ sudo apt install screen
```

Next, type `screen`, which moves you into a new session where you can run your script without any modifications:

```shell
$ python script.py
```

Then detach from the current screen session by pressing Ctrl-A followed by D.

To list all active screen sessions (you can launch as many as you like), type:

```shell
$ screen -ls
```

To reattach to your detached session and check the progress and output of your command, type the command below (if several sessions are detached, add the session name shown by `screen -ls`):

```shell
$ screen -r
```

There is a lot more to this utility, which is a really powerful window manager / terminal multiplexer, but for running a process in the background this should be enough. On this note I'm leaving you with a bunch of extra links to check. Stay curious, fight for freedom 🇺🇦